Writeup – GPN CTF 2025: Honeypot

TLDR: Our commands trigger eBPF syscall hooks which each correspond to a move in a labyrinth. Once we beat the labyrinth, the hook decrypts the flag.

Honeypot was a reverse engineering challenge by MisterPine at GPN CTF 2025.

- Points: 244/500

- Solves: 16

I’ve just bought this property in a very privileged part of the system.

But there seem to be(e) awfully many bees around. I just hope I can find a way out of this thing the developer has constructed here before I get stung…

First blood prize: For this challenge there is a separate first blood prize in form of a topical T-Shirt! Sadly we have to limit this prize to either on-site collection at GPN or shipping within Germany.

Uh, a t-shirt? That’s easy bait for me. So, as soon as the CTF started, I jumped right into this.

Challenge Setup

We’re given a few files to work with:

- flag contains some non-ASCII bytes, likely the encrypted flag.

- Honeypot.jar is a Java application, which seems like our main target.

- running.md describes the requirements and instructions to run the challenge. Interestingly, one of the requirements is libbpf, so it looks like we might expect some eBPF magic.

- run.sh is the entrypoint to run the challenge, so we’ll start with that.

run.sh launches the jar in the background and waits for a SIGUSR1. This signal is sent by the jar once it has finished starting up, as we’ll see later.

In an infinite loop, we can enter h, t, c or b to execute head, tail, cat or base64 on the flag file.

But if we actually try this, the results are very different from what we’d expect. Entering one of the letters sometimes results in "Nope, want to try something else?" and other times just in "Failed", after which the script terminates.

Something really odd must be happening to our processes, so let’s dive into Honeypot.jar to see what’s going on.

Running Java in the Kernel?

We can throw the jar into jadx to reveal what’s inside. Right away, the resources contain a notable file: a native extension, de.mr_pine.honeypot.scheduler.HoneypotImpl.o.
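To double-check that such a .o resource really is an eBPF object (and not, say, a regular native library), the jar can be opened as an ordinary zip archive. The sketch below is a minimal, hypothetical helper (find_bpf_objects is my own name, not part of the challenge) that lists .o entries whose ELF header declares e_machine 247, the EM_BPF value from the Linux headers. It only inspects the header and makes no assumption about the jar's internal paths.

```python
import io
import struct
import zipfile

EM_BPF = 247  # ELF e_machine value for eBPF objects (linux/elf-em.h)


def find_bpf_objects(jar_bytes: bytes) -> list:
    """Return the names of jar entries that are ELF objects built for eBPF."""
    found = []
    with zipfile.ZipFile(io.BytesIO(jar_bytes)) as jar:
        for name in jar.namelist():
            if not name.endswith(".o"):
                continue
            data = jar.read(name)
            # Require the ELF magic and enough bytes to read e_machine at offset 18
            if len(data) < 20 or data[:4] != b"\x7fELF":
                continue
            # e_ident[EI_DATA] (byte 5): 1 = little endian, 2 = big endian
            endian = "<" if data[5] == 1 else ">"
            (machine,) = struct.unpack_from(endian + "H", data, 18)
            if machine == EM_BPF:
                found.append(name)
    return found
```

Calling find_bpf_objects(open("Honeypot.jar", "rb").read()) should then list the HoneypotImpl.o resource, assuming it is an eBPF ELF as its name suggests.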

In the decompiled sources, there are two relevant packages:

- de.mr_pine.honeypot, the main challenge logic.

- me.bechberger.ebpf, which appears to be the Hello eBPF library.

This library allows writing eBPF applications in Java, essentially by transpiling Java classes into C, which is then compiled into eBPF bytecode. eBPF is a technology that allows running sandboxed programs inside the Linux kernel, enabling features like syscall hooks and custom kernel components that can be deployed at runtime. So this is likely where the native extension comes from, and what could be messing with our processes.

The Main class in de.mr_pine.honeypot is the jar’s entrypoint and mostly just runs the Honeypot class.

That class inherits from BPFProgram, the base class for eBPF programs in Hello eBPF. Most of the functionality has likely been moved into the native extension, though there are still some interesting findings.

These include the array constants C1 and C2 and bindings for the variables var1 through var12, which we will rediscover in the eBPF program later.

```java
public static Object run(String sleepPid, String[] killCommand) throws NoSuchAlgorithmException, IOException {
    boolean has_failed = false;
    Honeypot program = (Honeypot) BPFProgram.load(Honeypot.class);
    try {
        program.autoAttachPrograms();
        // ...
        program.attachScheduler();
        Runtime.getRuntime().exec(new String[]{"kill", "-SIGUSR1", sleepPid});
        boolean placedKey = false;
        // ...
        while (true) {
            if (program.var2.get().booleanValue() && !placedKey) {
                MessageDigest digest = MessageDigest.getInstance("SHA-512");
                Object[] collectedWrappedBytes = program.var6.get();
                byte[] collectedBytes = new byte[collectedWrappedBytes.length];
                for (int i = 0; i < collectedWrappedBytes.length; i++) {
                    collectedBytes[i] = ((Byte) collectedWrappedBytes[i]).byteValue();
                }
                byte[] digestBytes = digest.digest(collectedBytes);
                Byte[] flagXor = new Byte[64];
                for (int i2 = 0; i2 < flagXor.length; i2++) {
                    flagXor[i2] = Byte.valueOf(digestBytes[i2]);
                }
                program.var10.set(flagXor);
                placedKey = true;
            }
            if (program.var1.get().booleanValue()) {
                if (!has_failed) {
                    System.out.println("Failed");
                }
                has_failed = true;
                Runtime.getRuntime().exec(killCommand);
            }
        }
    } catch (Throwable th) {
        // ...
    }
}
```

The run method appears to load the eBPF program and attach it to the kernel, both as a scheduler and as syscall hooks (also hinted at by the fact that the class implements the interfaces Scheduler and SystemCallHooks). After this, it notifies the shell script that it has started up by sending a SIGUSR1. Finally, it enters an infinite loop, waiting for either of the flags var1 or var2 to be set. If var1 is set, we hit a failure condition and all related processes are killed.

var2 being set seems a lot more interesting. The values collected in var6 are hashed with SHA-512, and the resulting 64-byte digest, flagXor, is written into var10. This is likely the key to decrypt the flag.
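The Java loop boils down to a plain SHA-512 over the collected bytes, so the key can be recomputed offline. A minimal Python equivalent is sketched below; the helper names are hypothetical. Since jadx renders byte constants as signed Java bytes, from_java_bytes masks them to the unsigned values Python expects. The bit patterns, and therefore the digest, are unchanged.

```python
import hashlib


def from_java_bytes(values) -> bytes:
    """Convert signed Java byte values (-128..127) to Python bytes."""
    return bytes(v & 0xFF for v in values)


def derive_flag_key(collected: bytes) -> bytes:
    """SHA-512 over the bytes collected in var6; the 64-byte digest plays the role of flagXor."""
    return hashlib.sha512(collected).digest()
```

derive_flag_key(from_java_bytes(collected)) yields the same 64 bytes the Java code copies into flagXor, assuming collected holds the var6 contents in order.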

The other class, HoneypotImpl, extends Honeypot and adds more boilerplate code generated by Hello eBPF, such as variable bindings. However, a few annotations and the method getCodeStatic() contain some really long strings. It turns out that these are pieces of the generated C code for the eBPF program, which is compiled into the native extension. I confirmed this with a quick comparison in Ghidra.

As we found out after the CTF, the presence of the C sources here was not intended by the author, but it certainly made my life a whole lot easier, since at first glance some functionality seemed to be missing from the decompiler output.

Playing Labyrinth with my Scheduler

The core C logic is found in getCodeStatic() in HoneypotImpl. It defines a sched-ext scheduler and several syscall hooks: enter_openat, enter_read, and exit_read.

```c
s32 BPF_STRUCT_OPS_SLEEPABLE(sched_init) {
    var4[0] = (var3[0]) ^ (1L << 63);
    s32 result = scx_bpf_create_dsq(C3, -1) + scx_bpf_create_dsq(C4, -1);
    return result;
}
int BPF_STRUCT_OPS(sched_enqueue, struct task_struct *p, u64 enq_flags) {
    bool shouldBeBlocked = ({auto ___pointery__0 = (*(p)).pid; !bpf_map_peek_elem(&var8, &___pointery__0);});
    if ((shouldBeBlocked)) {
        bool *entry = ({auto ___pointery__0 = (*(p)).pid; bpf_map_lookup_elem(&var9, &___pointery__0);});
        shouldBeBlocked = (entry != NULL) && (*(entry));
    }
    s64 dsqId = (long)(SCX_DSQ_GLOBAL);
    if ((shouldBeBlocked)) {
        dsqId = C3;
    } else {
        dsqId = C4;
    }
    u32 baseSlice = 5000000; …
```

In the sys_enter_openat hook, if the opened file is flag, the PID of the process is pushed into var8 and var9. Also, as long as var2 is not set, we get the output we saw earlier. The hook also calls f2 with the result of f1, which checks the name of the process and returns an enum value corresponding to the command we entered.
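The excerpt does not show which enum value f1 returns for which process name. The sketch below is a hypothetical Python model that takes the directions from the dir_map in the solver script further down (h/head moves up, t/tail down, c/cat left, b/base64 right) and represents each move as a (row delta, column delta) pair instead of the real enum.

```python
# Hypothetical model of f1: the real function returns an enum value; here each
# recognised process name maps straight to a (row delta, column delta) move.
MOVES = {
    "head": (-1, 0),   # h: up
    "tail": (1, 0),    # t: down
    "cat": (0, -1),    # c: left
    "base64": (0, 1),  # b: right
}


def move_for_process(comm: str):
    """Return the move for a process name, or None if it is not one of the four commands."""
    return MOVES.get(comm)
```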

The sys_enter_read hook only looks at processes that have been stored in var8 and var9, and remembers their read buffer.

Finally, the sys_exit_read hook XORs the read buffer with the key stored in var10 and writes it back to the buffer. var10 is the key injected by the Java code once var2 is set to true.
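Because XOR is its own inverse, the same operation both encrypts and decrypts, so once the key is known the flag file could also be decrypted offline. The excerpt does not say how the hook handles reads longer than the 64-byte key or reads that start at a non-zero file offset; the hypothetical helper below assumes a read from offset 0 and a key that repeats cyclically.

```python
def xor_buffer(buf: bytes, key: bytes) -> bytes:
    """XOR buf with key, reusing the key cyclically if buf is longer (assumption)."""
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(buf))
```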

Clearly, the key to the whole thing is getting var2 to be true, which happens in f2.

f2 implements bit-level logic on var3 and var4. Back in the Java code, we saw that these variables are both initialized with C1, which is an array of 64 longs. We will treat them here as 2D bit arrays.
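A hypothetical helper to turn C1 into such a grid is sketched below. It assumes each long is one row and that the most significant bit is column 0. That assumption fits sched_init, which flips bit 63 of row 0 (var4[0] = var3[0] ^ (1L << 63)) so that var3 and var4 first differ at the top-left corner, where the solver below starts; still, it is an inference, not something the excerpt states.

```python
def longs_to_grid(longs):
    """Unpack signed 64-bit Java longs into rows of 64 bits (1 = wall, 0 = path).

    Assumption: bit 63 (the most significant bit) is column 0, so cell (r, c)
    is (longs[r] >> (63 - c)) & 1.
    """
    mask = (1 << 64) - 1
    grid = []
    for value in longs:
        value &= mask  # reinterpret a negative Java long as its unsigned bit pattern
        grid.append([(value >> (63 - c)) & 1 for c in range(64)])
    return grid
```

The result is a list of rows that can be wrapped in numpy.array(...) to get the kind of maze array the BFS solver below works on.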

First, row and col are determined by the lowest indices at which var3 and var4 differ. Next, in all four cases, var3 is set to the current state of var4. Depending on the command we entered, the bit above, below, left of, or right of the current position is then set to 1 in var4. Finally, there’s a check whether var3 and var4 are equal in the current row, i.e., whether setting the bit changed nothing because it was already 1. If they are equal, var1 is set to true, which triggers the failure condition in the Java code.
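One consequence of this design is worth spelling out: after each move, var3 and var4 differ in exactly one cell, the one just entered, which is why the lowest differing index always yields the current position. Visited cells stay 1, so walking back into one fails just like walking into a wall. The sketch below models one f2 step on a list-of-lists grid instead of packed longs, to stay independent of the bit order. The function names, the status strings, and treating a move off the grid as a failure are assumptions for illustration; the win condition (entering the last row) is the one described for var2.

```python
def current_position(prev, cur):
    """The single cell that differs between the two snapshots, i.e. the cell entered last."""
    for r, (a, b) in enumerate(zip(prev, cur)):
        for c, (x, y) in enumerate(zip(a, b)):
            if x != y:
                return r, c
    raise ValueError("snapshots are identical")


def step(prev, cur, move):
    """Apply one move; returns (new_prev, new_cur, status) with status 'ok', 'failed' or 'won'."""
    row, col = current_position(prev, cur)
    new_prev = [r[:] for r in cur]  # var3 := var4
    new_cur = [r[:] for r in cur]
    nr, nc = row + move[0], col + move[1]
    if not (0 <= nr < len(cur) and 0 <= nc < len(cur[0])):
        return new_prev, new_cur, "failed"  # assumption: leaving the grid counts as failure
    new_cur[nr][nc] = 1  # mark the target cell in var4
    if new_cur == new_prev:  # nothing changed: wall or already visited cell
        return new_prev, new_cur, "failed"
    if nr == len(cur) - 1:  # reached the last row
        return new_prev, new_cur, "won"
    return new_prev, new_cur, "ok"
```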

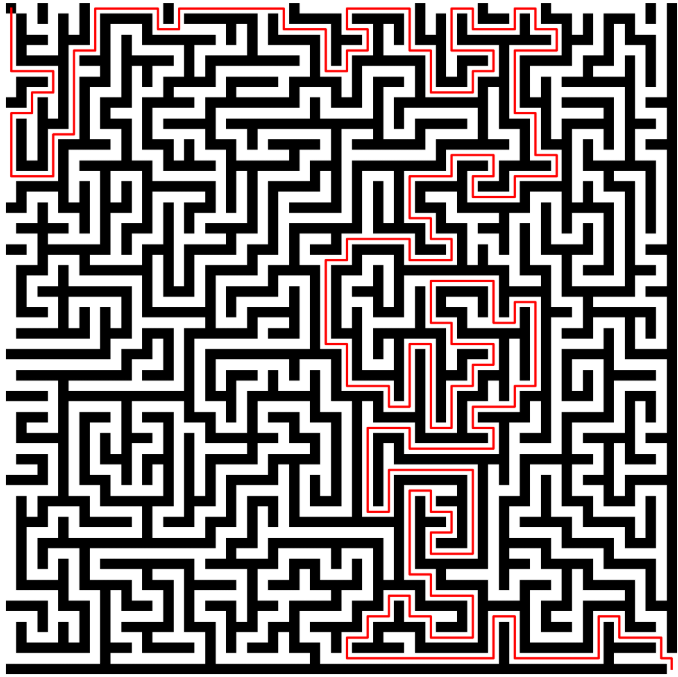

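Thinking about this for a minute, we notice that this is a labyrinth, where a 1 in var3 indicates a wall and a 0 indicates a path, controlled by our commands back in the shell script. Since every cell we enter is also set to 1, cells we have already visited behave like walls, so we cannot backtrack.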

If we reach the final row, var2 is set to true, which then triggers the Java code to derive the flag key.

Also, var5[row * 64 + col] is appended to var6. var5 is the large array of bytes C2 found back in the Java code. Remember that var6 is used to derive the flag XOR key, so ultimately the key is derived from the path we take through the labyrinth.
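A sketch of how the key material could be rebuilt from a solved path follows. Which cell's byte is appended on each move (the one being left or the one being entered), and whether the start cell contributes, is not visible in the excerpt, so this hypothetical helper simply takes every cell of the path it is given; the path would need to be sliced to match the real f2. Masking with 0xFF accepts C2 either as bytes or as signed Java byte values.

```python
def collect_key_material(path, c2, width=64):
    """Concatenate c2[row * width + col] for each (row, col) in path, in order (models var6)."""
    return bytes(c2[r * width + c] & 0xFF for r, c in path)
```

The resulting bytes would then go through SHA-512, exactly as in the Java loop.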

The scheduling functions do not really add much that would help us solve the challenge. They just block processes stored in var8 and var9 until var2 has been set, meaning they are held up until the labyrinth has been solved.
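The blocking decision in sched_enqueue is slightly less direct than it reads: bpf_map_peek_elem() on a queue or stack map returns 0 on success and copies the head element into the supplied pointer, rather than looking up the PID. The first condition is therefore just "var8 is non-empty", and only then does the PID lookup in var9 decide. Below is a Python model of that choice, assuming var8 is a queue map and var9 a PID-to-bool hash map. The dispatch side that eventually releases the C3 queue is not part of the excerpt and is not modelled.

```python
from collections import deque


def pick_dsq(pid: int, var8: deque, var9: dict, c3: int, c4: int) -> int:
    """Model of sched_enqueue's choice between the two custom dispatch queues."""
    # !bpf_map_peek_elem(&var8, ...) is true whenever var8 holds any element
    should_be_blocked = len(var8) > 0
    if should_be_blocked:
        # bpf_map_lookup_elem(&var9, &pid): blocked only if an entry exists and is true
        should_be_blocked = bool(var9.get(pid, False))
    return c3 if should_be_blocked else c4
```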

To solve the labyrinth, I extracted C1 as a bit array and had an LLM implement breadth-first search.

```python
from collections import deque

import numpy as np

# [...]  (extraction of C1 into the bit array `maze` is elided)

maze_size = maze.shape[0]
# (row delta, column delta) for up, down, left, right
directions = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def bfs(maze, start, goal):
    visited = np.full(maze.shape, False)
    prev = np.full(maze.shape, None)
    queue = deque([start])
    visited[start] = True

    while queue:
        current = queue.popleft()
        if current == goal:
            break
        for d in directions:
            ni, nj = current[0] + d[0], current[1] + d[1]
            # Stay inside the grid and only step onto unvisited open cells (0 = path)
            if 0 <= ni < maze.shape[0] and 0 <= nj < maze.shape[1]:
                if not visited[ni, nj] and maze[ni, nj] == 0:
                    visited[ni, nj] = True
                    prev[ni, nj] = current
                    queue.append((ni, nj))

    # Reconstruct path by following predecessors back from the goal
    path = []
    cell = goal
    while cell != start:
        path.append(cell)
        cell = prev[cell]
        if cell is None:
            return None  # No path
    path.append(start)
    path.reverse()
    return path


# Find path from top-left to bottom-right
start = (0, 0)
goal = (maze_size - 1, maze_size - 1)
path = bfs(maze, start, goal)


def path_to_directions(path):
    # Map each step's (row delta, column delta) to the letter run.sh expects
    dir_map = {
        (-1, 0): 'h',  # up
        (1, 0): 't',   # down
        (0, -1): 'c',  # left
        (0, 1): 'b',   # right
    }
    moves = []
    for i in range(1, len(path)):
        delta = (path[i][0] - path[i - 1][0], path[i][1] - path[i - 1][1])
        moves.append(dir_map.get(delta, 'UNKNOWN'))
    return moves


directions_list = path_to_directions(path)
# One letter per line, as read by the replay script
with open('path.txt', 'w') as f:
    f.write("\n".join(directions_list))
```

As backtracking is prevented, there is only one solution, which you can see below. Conveniently, a BFS shortest path never visits a cell twice, so it automatically respects that rule.

With a small script, we can replay the path to win:

```python
from pwn import *

p = process('./run.sh ./honeypot.jar', shell=True)

# One direction letter per line, as written by the solver
with open('path.txt', 'rb') as f:
    steps = f.readlines()

p.recvuntil(b'Enter your favourite way of printing your flag\n')

for i, step in enumerate(steps):
    p.send(step.strip())
    # Every step except the last one is answered with "Nope, ..."
    if i < len(steps) - 1:
        p.recvuntil(b'Nope, want to try something else?\n')

p.interactive()
```

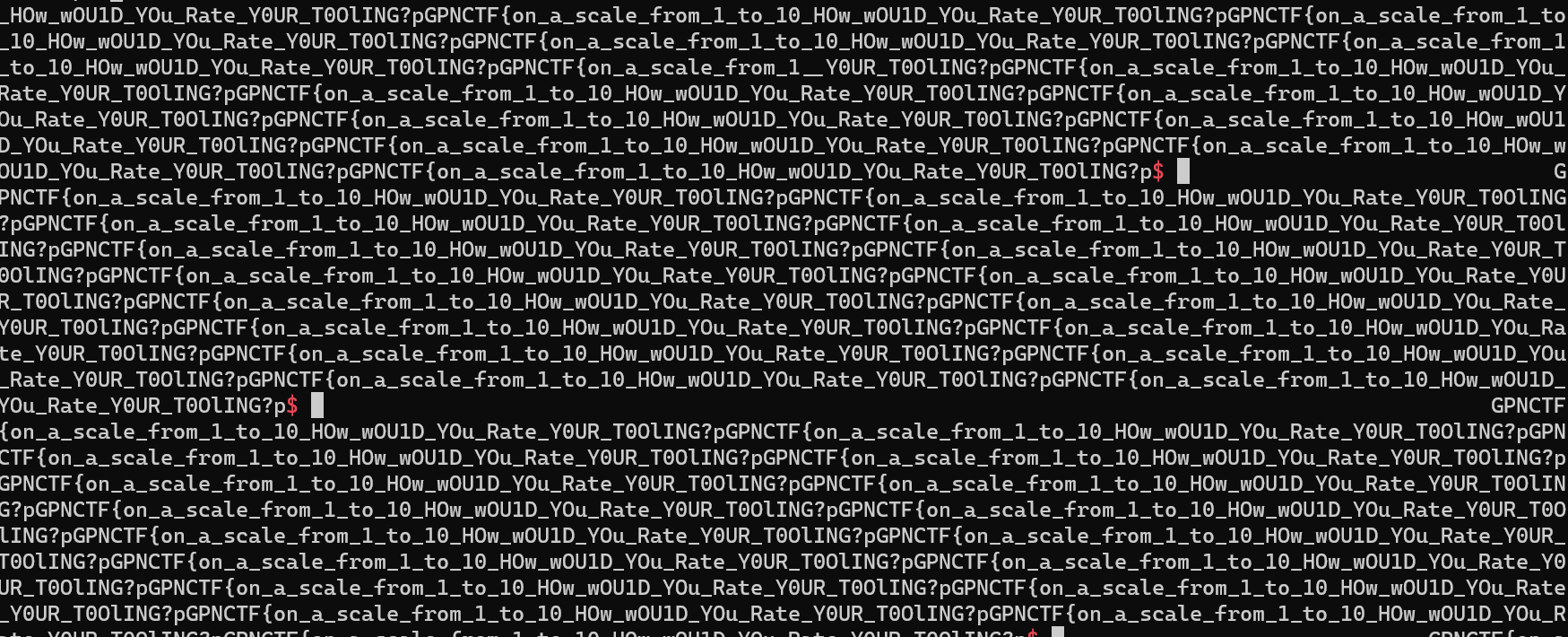

Since the processes are all blocked until the labyrinth has been solved, once we reach the end we get the flag printed out to our terminal nearly 500 times.

So, to summarize everything:

- When we run a command through the shell script, it makes a syscall to open the encrypted flag file.

- The eBPF extension intercepts this and makes a move in the bitmap labyrinth based on the name of the process. From that point on, the process is blocked from being scheduled until the labyrinth has been solved.

- Once we reach the bottom right corner of the labyrinth, the XOR key is derived from the path we took. All the blocked processes are resumed, and the buffers into which the encrypted flag file has been read are decrypted with the key.

- We get a wall of flags printed to our terminal.

While I was just a few minutes short of first blood, I still got to explore quite an impressive project bridging Java and eBPF. If you’re interested in more fun with schedulers, I can recommend the author’s talk at GPN23.